Why Learning to Tell Human-Made Images from AI-Generated Ones Matters

Mr. B


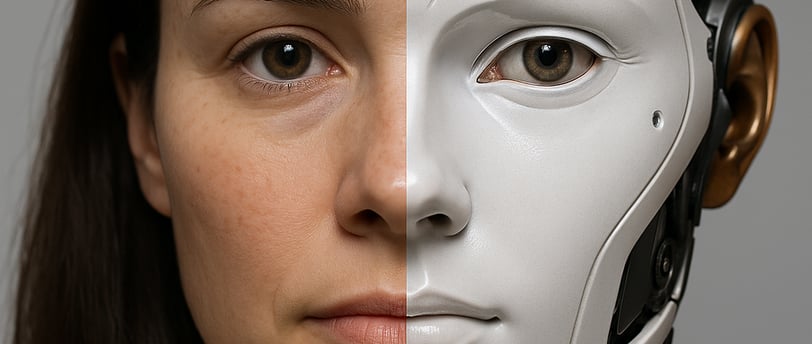

In the age of generative AI, the line between what is real and what is synthetic has never been more blurred. Tools like Midjourney, DALL·E, and Stable Diffusion can produce hyper-realistic visuals, from imaginary portraits to dreamlike landscapes. But how can we tell the difference between an image made by a human and one generated by an algorithm?

This question isn’t just philosophical. It has practical implications for journalism, digital trust, copyright protection, and online misinformation. And this is where AI image detectors come into play.

Let’s explore how these tools work, why they matter, and what you can expect from using one.

How Does an AI Image Detector Work?

At its core, an AI image detector is a classification model trained to differentiate between human-made and AI-generated images. But this task is far more complex than it may seem.

Supervised Learning at the Core

Most AI image detectors rely on supervised learning. During training, the model is shown thousands (sometimes millions) of labeled images:

Real photos taken by humans (from smartphones, cameras, etc.)

AI-generated images created by models like Midjourney or Stable Diffusion

Each image is labeled with its source: “human” or “AI.” Over time, the model learns to recognize patterns and statistical features that correlate with each type of image.
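To make the training loop concrete, here is a minimal Python sketch of supervised binary classification. It is a toy, not a real detector: each image is assumed to be already reduced to two hypothetical numeric features (a made-up "smoothness" score and a made-up "noise level"), the data is synthetic, and plain logistic regression stands in for the deep networks real detectors use. Label 1 means "AI" and 0 means "human".

```python
import math
import random


def make_toy_dataset(n_per_class: int, seed: int = 0) -> list[tuple[list[float], int]]:
    """Synthetic data: two hypothetical features per image, label 1 = AI, 0 = human."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_per_class):
        # Assumption for the toy: "AI" images are smoother and carry less sensor noise.
        data.append(([rng.gauss(0.8, 0.1), rng.gauss(0.2, 0.1)], 1))
        data.append(([rng.gauss(0.4, 0.1), rng.gauss(0.6, 0.1)], 0))
    return data


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(data, epochs: int = 200, lr: float = 0.5):
    """Batch gradient descent on the logistic (cross-entropy) loss."""
    n_features = len(data[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in data:
            # Prediction error for this labeled example drives the update.
            error = sigmoid(sum(w * x for w, x in zip(weights, features)) + bias) - label
            for i, x in enumerate(features):
                grad_w[i] += error * x
            grad_b += error
        weights = [w - lr * g / len(data) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(data)
    return weights, bias


def predict_ai_probability(weights, bias, features) -> float:
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


if __name__ == "__main__":
    train = make_toy_dataset(200)
    weights, bias = train_logistic_regression(train)
    accuracy = sum(
        (predict_ai_probability(weights, bias, f) >= 0.5) == bool(y) for f, y in train
    ) / len(train)
    print(f"training accuracy: {accuracy:.2f}")
```

Real detectors learn their features directly from pixels rather than from two hand-picked numbers, but the principle is the same: adjust the model's parameters until its predictions match the labels.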

What the AI Actually Analyzes

Unlike humans, who can weigh context or artistic intent, AI models work purely with visual data. They look for patterns and anomalies, some noticeable to a careful viewer and many imperceptible to the naked eye, including:

Overly smooth or uniform textures

Slight anatomical errors (like extra fingers or asymmetrical eyes)

Unnatural lighting or depth inconsistencies

Artifacts from image synthesis, such as warping or local blur

These subtle cues allow the model to estimate whether the image likely originates from a generative AI.
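As an illustration of the first cue, the sketch below computes a simple hand-crafted "fine detail" score: the mean absolute response of a 3x3 Laplacian filter on a grayscale image stored as a list of rows. This is an assumption made for illustration only; trained detectors learn their own features rather than relying on one fixed filter, and a low score on its own proves nothing about an image's origin.

```python
import random


def laplacian_energy(image: list[list[float]]) -> float:
    """Mean absolute 3x3 Laplacian response over the image interior.

    Low values mean little fine detail (a possible "overly smooth" cue);
    high values mean lots of pixel-level variation, such as sensor noise.
    """
    height, width = len(image), len(image[0])
    total, count = 0.0, 0
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            response = (
                image[y - 1][x] + image[y + 1][x] + image[y][x - 1] + image[y][x + 1]
                - 4 * image[y][x]
            )
            total += abs(response)
            count += 1
    return total / count


def smooth_gradient(size: int) -> list[list[float]]:
    """A perfectly smooth horizontal ramp: its Laplacian is (numerically) zero."""
    return [[x / (size - 1) for x in range(size)] for _ in range(size)]


def add_sensor_noise(image, sigma: float = 0.05, seed: int = 0):
    """Add Gaussian pixel noise, loosely mimicking a camera sensor."""
    rng = random.Random(seed)
    return [[pixel + rng.gauss(0.0, sigma) for pixel in row] for row in image]


if __name__ == "__main__":
    smooth = smooth_gradient(16)
    print(f"smooth ramp: {laplacian_energy(smooth):.4f}")
    print(f"with noise:  {laplacian_energy(add_sensor_noise(smooth)):.4f}")
```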

The Role of Vision Transformers

Modern image detectors often use vision transformers (ViTs), which adapt the transformer architecture originally developed for natural language processing (NLP) to images by treating small image patches much like words. Through self-attention, transformers can model long-range relationships within the data, which makes them well suited to detecting inconsistencies between distant parts of an image.

For example, a transformer might notice that the reflections in someone’s glasses don’t match the lighting in the background—a red flag for synthetic content.
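The sketch below shows the two ingredients that give a vision transformer its global view: cutting the image into patches, and computing scaled dot-product self-attention weights between every pair of patches. To keep it short, it assumes image dimensions divisible by the patch size and uses identity query/key projections; a real ViT learns these projections, adds position embeddings, and stacks many attention layers.

```python
import math


def split_into_patches(image: list[list[float]], patch_size: int) -> list[list[float]]:
    """Cut a grayscale image into non-overlapping square patches, each flattened row by row."""
    patches = []
    for top in range(0, len(image), patch_size):
        for left in range(0, len(image[0]), patch_size):
            patches.append([
                image[y][x]
                for y in range(top, top + patch_size)
                for x in range(left, left + patch_size)
            ])
    return patches


def softmax(values: list[float]) -> list[float]:
    largest = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - largest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention_weights(patches: list[list[float]]) -> list[list[float]]:
    """Scaled dot-product attention weights with identity query/key projections.

    Row i says how strongly patch i attends to every patch j, however far apart they are.
    """
    dim = len(patches[0])
    scores = [
        [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in patches]
        for query in patches
    ]
    return [softmax(row) for row in scores]


if __name__ == "__main__":
    # Toy 4x4 image: the top-left and bottom-right 2x2 patches are identical.
    image = [
        [1, 1, 0, 0],
        [1, 1, 0, 0],
        [0, 0, 1, 1],
        [0, 0, 1, 1],
    ]
    for row in self_attention_weights(split_into_patches(image, 2)):
        print([round(w, 3) for w in row])
```

In the toy image, the top-left and bottom-right patches are identical, so each attends strongly to the other even though they sit at opposite corners. That is the kind of long-range comparison the glasses-versus-background example relies on.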

Detection is Always Probabilistic

It’s important to understand that AI image detection is not absolute. The model doesn’t give a yes or no answer, but rather a confidence score.

Example: “This image is 87% likely to be AI-generated.”

Why is this probabilistic approach necessary?

Some AI images are extremely realistic

Some real photos can be misinterpreted as fake (due to filters or compression)

There are edge cases and intentional manipulations

This is why responsible use of AI detection tools requires interpreting the output as an informed estimate—not a definitive judgment.
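The sketch below shows one way to turn a raw model output into a hedged verdict. It assumes the model emits a logit that a sigmoid maps to a score between 0 and 1, and it adds a hypothetical "Inconclusive" band (the `margin` parameter) around 0.5 so that borderline images are not forced into "Likely AI" or "Likely Human". Such a score only behaves like a true probability if the model has been calibrated.

```python
import math


def sigmoid(logit: float) -> float:
    """Map a raw model output (logit) to a score in [0, 1] without overflowing."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    z = math.exp(logit)
    return z / (1.0 + z)


def interpret_score(p_ai: float, margin: float = 0.15) -> str:
    """Turn an AI-likelihood score into a hedged, human-readable verdict.

    `margin` is a hypothetical half-width of the "Inconclusive" band around 0.5.
    """
    if not 0.0 <= p_ai <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if p_ai >= 0.5 + margin:
        label = "Likely AI"
    elif p_ai <= 0.5 - margin:
        label = "Likely Human"
    else:
        label = "Inconclusive"
    return f"{label} ({p_ai:.0%} AI score)"


if __name__ == "__main__":
    for logit in (1.9, 0.2, -2.5):
        print(interpret_score(sigmoid(logit)))
```

Widening `margin` makes the tool say "Inconclusive" more often, trading coverage for fewer confident mistakes on filtered, compressed, or borderline images.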

Current Limitations

AI detectors are improving rapidly, but they still have limits:

AI generators improve quickly, and each new version tends to eliminate artifacts that earlier detectors learned to spot

Compression and editing (e.g., applying filters or cropping) can reduce detection accuracy

Collaged or edited images may combine real and fake elements, making them harder to classify

Some AI tools can now mimic even photographic imperfections, fooling traditional detectors

In short, the battle between generation and detection is an ongoing arms race.
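The effect of compression and resizing can be illustrated with a toy experiment. The sketch below averages each 2x2 block of a noisy image (a crude stand-in for downscaling or lossy re-encoding, not a real JPEG codec) and measures how much pixel-to-pixel variation survives. Fine-grained traces of this kind are among the signals a detector may rely on, so weakening them can make classification harder; how much accuracy is actually lost depends on the detector.

```python
import random


def neighbor_difference_energy(image: list[list[float]]) -> float:
    """Mean absolute difference between horizontally adjacent pixels (a rough fine-detail measure)."""
    diffs = [abs(row[x + 1] - row[x]) for row in image for x in range(len(row) - 1)]
    return sum(diffs) / len(diffs)


def downscale_2x(image: list[list[float]]) -> list[list[float]]:
    """Average each 2x2 block; a crude stand-in for resizing or lossy re-encoding."""
    return [
        [
            (image[y][x] + image[y][x + 1] + image[y + 1][x] + image[y + 1][x + 1]) / 4
            for x in range(0, len(image[0]) - 1, 2)
        ]
        for y in range(0, len(image) - 1, 2)
    ]


def noisy_image(size: int, sigma: float = 0.1, seed: int = 1) -> list[list[float]]:
    """Flat gray image plus Gaussian pixel noise, loosely mimicking sensor-level detail."""
    rng = random.Random(seed)
    return [[0.5 + rng.gauss(0.0, sigma) for _ in range(size)] for _ in range(size)]


if __name__ == "__main__":
    original = noisy_image(64)
    compressed = downscale_2x(original)
    print(f"before: {neighbor_difference_energy(original):.3f}")
    print(f"after:  {neighbor_difference_energy(compressed):.3f}")
```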

The Future of Image Verification

To strengthen digital trust, new strategies are emerging:

Watermarking AI-generated content, either visibly or invisibly

Embedding cryptographic metadata into files

Developing browser-level verification of image sources

Promoting content authenticity initiatives like C2PA (Coalition for Content Provenance and Authenticity)
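The idea behind signed provenance metadata can be sketched with Python's standard `hashlib` and `hmac` modules. This is a simplified illustration, not the C2PA format: C2PA relies on public-key signatures backed by certificates, whereas this sketch uses a single hypothetical shared secret, and the manifest fields (`sha256`, `generator`) are made up for the example. Changing either the image bytes or the metadata makes verification fail.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; real provenance systems use public-key signatures instead.
SECRET_KEY = b"demo-key-not-for-production"


def _sign(payload: dict) -> str:
    """HMAC-SHA256 over a canonical (sorted-key) JSON encoding of the payload."""
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hmac.new(SECRET_KEY, encoded, hashlib.sha256).hexdigest()


def sign_manifest(image_bytes: bytes, generator: str) -> dict:
    """Bind a hash of the image and a claim about its origin into one signed manifest."""
    manifest = {"sha256": hashlib.sha256(image_bytes).hexdigest(), "generator": generator}
    manifest["signature"] = _sign(manifest)
    return manifest


def verify_manifest(image_bytes: bytes, manifest: dict) -> bool:
    claimed = {k: v for k, v in manifest.items() if k != "signature"}
    # Constant-time comparison: a mismatch means the metadata was altered or forged.
    if not hmac.compare_digest(_sign(claimed), manifest.get("signature", "")):
        return False
    # The signature is valid, so now check that the image itself was not modified.
    return claimed.get("sha256") == hashlib.sha256(image_bytes).hexdigest()


if __name__ == "__main__":
    image = b"...image bytes..."
    manifest = sign_manifest(image, "example-generator")
    print(verify_manifest(image, manifest))
    print(verify_manifest(image + b"edited", manifest))
```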

Until these technologies become mainstream, AI image detectors remain one of the most accessible and effective tools to determine image origin.

Our Tool: A Simple and Powerful Solution

To support users navigating this new visual landscape, we’ve built a free online AI image detector. Here's what you can expect:

No registration required

Just upload an image to get an instant result

A clear answer: “Likely Human” or “Likely AI,” with a confidence score

No data stored – we respect user privacy

Powered by a robust deep learning model trained on thousands of examples

Whether you’re a journalist, designer, teacher, or simply curious, this tool helps you take back control of how you understand and trust visual content online.

Why It Matters

Being able to tell human-made images from AI-generated ones is no longer optional—it’s essential.

Fighting misinformation: Detect fake news visuals or deepfakes

Protecting artists: Ensure credit goes to human creators when due

Educational awareness: Teach critical thinking in a world of generative media

Supporting transparency: Know when you’re seeing a machine’s output

The rise of synthetic media challenges our perception, but it also offers a chance to reinforce digital literacy and ethical AI use.

Conclusion

AI may challenge our eyes, but it shouldn’t compromise our judgment. As generative models continue to evolve, learning how to detect them becomes a fundamental skill for anyone navigating the digital world.

With the right knowledge and tools, you can spot the difference, stay informed, and protect what’s real.

👉 Try our AI Image Detector today and see for yourself.


© 2025. All rights reserved.